Will AI kill search?: Google disrupting itself, evolving search to follow the user.

Source: YouTube

IBL News | New York

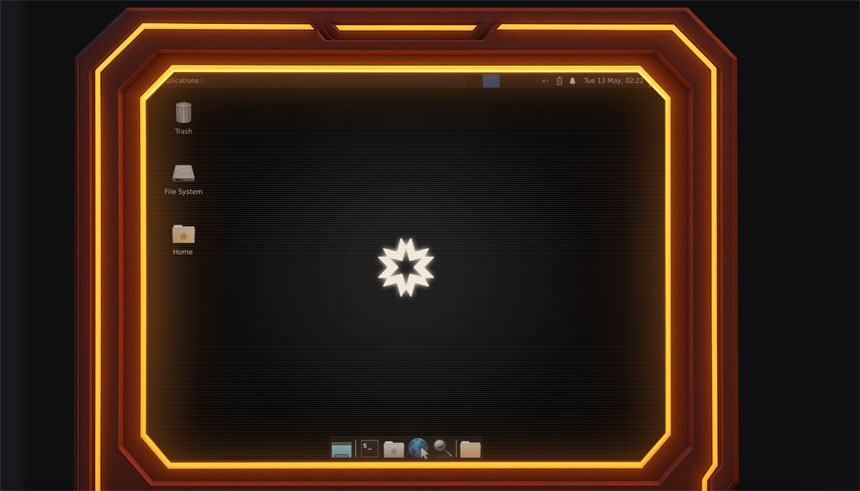

Hugging Face released a free, web-based agentic AI tool called Open Computer Agent this month, which behaves similarly to OpenAI’s Operator.

Users can prompt a task, and the agent opens the necessary programs and determines the required steps.

It uses a Linux virtual machine preloaded with several applications, including Firefox. However, as TechCrunch described, “Be forewarned: It’s quite sluggish and occasionally makes mistakes.”

“It often runs into CAPTCHA tests that it’s unable to solve.”

The Hugging Face team’s goal is not to build a state-of-the-art computer-using agent but to demonstrate that open AI models are becoming more capable and cheaper to run on cloud infrastructure, as one of the developers explained in the post below.

We’re launching Computer Use in smolagents! 🥳

-> As vision models become more capable, they become able to power complex agentic workflows. Especially Qwen-VL models, that support built-in grounding, i.e. ability to locate any element in an image by its coordinates, thus to… pic.twitter.com/mI8MuWZkIS

— m_ric (@AymericRoucher) May 6, 2025

Agentic technology is attracting increasing investment as enterprises look to boost productivity. A recent KPMG survey shows that 65% of companies are experimenting with AI agents. Experts say the AI agent segment might grow from $7.84 billion in 2025 to $52.62 billion by 2030.
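The growth implied by those two market-size figures can be expressed as a compound annual growth rate (CAGR). The following is a minimal sketch, assuming steady compounding over the five years from 2025 to 2030; the function name `implied_cagr` is illustrative and does not come from the cited forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.10 means 10%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # CAGR = (end / start) ** (1 / years) - 1
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
# Assumption: growth compounds evenly across 2025-2030 (5 years).
rate = implied_cagr(7.84, 52.62, 2030 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

Under that assumption, the quoted figures imply growth of roughly 46% per year.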

Mastering AI means understanding what it does well – and what humans do better.

Source: YouTube

We don’t often think of data as polluting, but what if every scroll, stream, and swipe came with a carbon cost? In this powerful talk, we’re asked to confront the hidden environmental toll of our digital lives – from AI’s massive energy demands to the mountains of e-waste we leave behind.

Source: YouTube

Some travelers are using AI to help plan their dream summer vacations or weekend getaways.

Source: YouTube

Microsoft says it is laying off nearly 3% of its entire workforce. Meanwhile, Amazon unveiled more than 750,000 robots it will use to sort, lift, and carry packages in the company’s warehouses.

Source: YouTube

A new program, Scholarship Owl, leverages artificial intelligence technology to generate personalized scholarship lists tailored to individual students.

Source: YouTube

The US needs to stay at the forefront of algorithmic advancements, says Konstantine Buhler, partner at Sequoia Capital.

Source: YouTube

IBL News | New York

Harvard University President Alan M. Garber will take a voluntary 25% pay cut for fiscal year 2026, which runs from July 1, 2025, to June 30, 2026. His salary has not been disclosed but is presumably upward of $1 million. Several other top Harvard officials are also taking voluntary pay cuts.

Garber’s pay cut is a gesture to share the financial strain that has hit faculty and staff since the Trump administration froze nearly $3 billion in funding.

More than 80 faculty members from several schools and academic units have pledged to donate 10% of their salaries for up to a year to support the University if it continues to resist the Trump administration.

In 2020, as provost, Garber took a similar 25% cut in response to the COVID-19 pandemic. At that time, then-President Lawrence S. Bacow and several deans also accepted temporary reductions as Harvard confronted a projected $750 million revenue shortfall.

Garber’s decision also mirrors moves by leaders of other schools. Brown University President Christina H. Paxson announced last month that she and two other senior administrators would take a 10% salary cut in fiscal year 2026.

On Tuesday, the Trump administration announced it would cut another $450 million in federal grants and contracts from Harvard, alleging that the University had failed to curb race-based discrimination and antisemitism. Earlier this month, the administration pledged to stop awarding grants or contracts to the University.

The cut, which covers grants awarded by eight unspecified federal agencies, is in addition to the $2.2 billion funding cut announced last month.

Harvard’s lawsuit against the Trump administration, filed in April, remains in its early stages. Oral arguments are scheduled to begin on July 21, and the legal battle is expected to last for months.

How artificial intelligence is reshaping education.

Source: YouTube