IBL News | New York

Runway released its most advanced video generation model, Gen-4, to paid and enterprise customers last week.

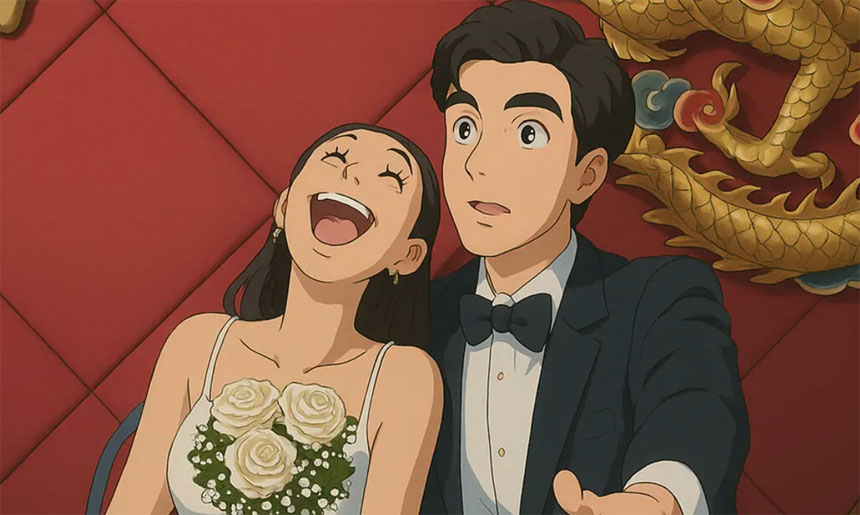

The AI video tools startup claimed the model can generate consistent characters, locations, and objects across scenes, maintain coherent world environments, and regenerate elements from different perspectives and positions within a scene "without the need for fine-tuning or additional training."

To craft a scene, users can provide images of subjects and describe the composition of the shot they want to generate.

Separately, Runway AI Inc. announced that it had raised $308 million in a new funding round that more than doubled the company's valuation, pushing it to just over $3 billion. Private equity firm General Atlantic led the round, which closed late last year. Other investors included Nvidia Corp. and SoftBank Group Corp.'s Vision Fund 2.

The company has differentiated itself by inking a deal with a major Hollywood studio and earmarking millions of dollars to fund films that use AI-generated video.

Runway says that Gen-4 allows users to generate consistent characters across lighting conditions using a reference image of those characters.

“Runway Gen-4 [also] represents a significant milestone in the ability of visual generative models to simulate real-world physics,” said the company.

Like all video-generating models, Gen-4 was trained on a large number of video examples, from which it learned the patterns it uses to generate synthetic footage.

Runway declined to say where the training data came from, partly to protect its competitive advantage; disclosing training-data sources can also expose AI companies to IP-related lawsuits.

Today we’re introducing Gen-4, our new series of state-of-the-art AI models for media generation and world consistency. Gen-4 is a significant step forward for fidelity, dynamic motion and controllability in generative media.

Gen-4 Image-to-Video is rolling out today to all paid… pic.twitter.com/VKnY5pWC8X

— Runway (@runwayml) March 31, 2025