IBL News | New York

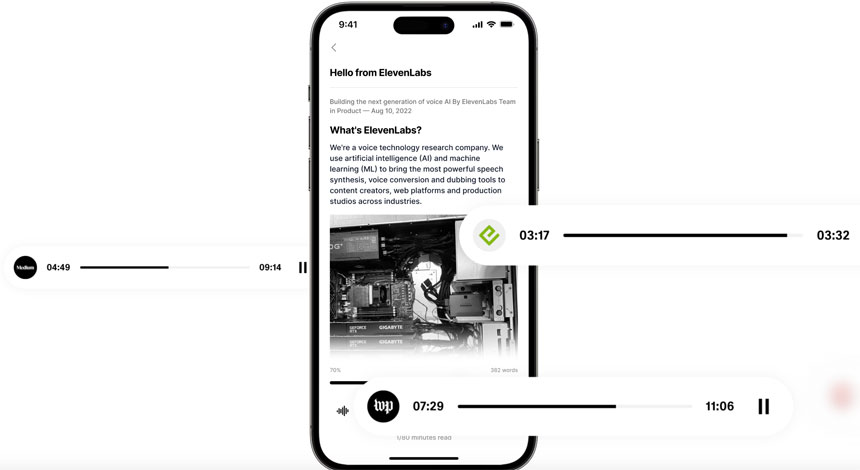

ElevenLabs.io has launched an iOS app that narrates text content, including books, PDFs, ePub files, and docs, for users on the go.

The ElevenLabs Reader App allows the user to choose a voice.

This text-to-speech tool is available in English for residents of the U.S., Canada, and the U.K. The company has set up a waiting list for Android users.

Available voices include deceased actors like Judy Garland, James Dean, Burt Reynolds, and Sir Laurence Olivier.

“Adding them to our growing list of narrators marks a major step forward in our mission of making content accessible in any language and voice,” said the start-up.

The partnership with the stars’ estates comes two months after ChatGPT-maker OpenAI was criticized for introducing a synthetic voice eerily similar to Scarlett Johansson’s character in “Her.”

Johansson said she was “shocked, angered and in disbelief” that the company would use her likeness after she turned down a partnership opportunity with OpenAI.

Today we’re launching the ElevenLabs iOS app!

It lets you listen to any article, book, or doc using our AI generated voices.

Check it out 🚀 pic.twitter.com/zQ9ISG8NUn

— Ammaar Reshi (@ammaar) June 25, 2024