IBL News | New York

Microsoft introduced new agent capabilities in its Copilot Studio platform this week.

These agents, which will be available later this year, will let users orchestrate tasks and functions.

They can work like virtual employees, for example by monitoring email inboxes and automating the data entry that workers typically do by hand. In effect, they are chatbots that handle complex tasks autonomously and proactively.

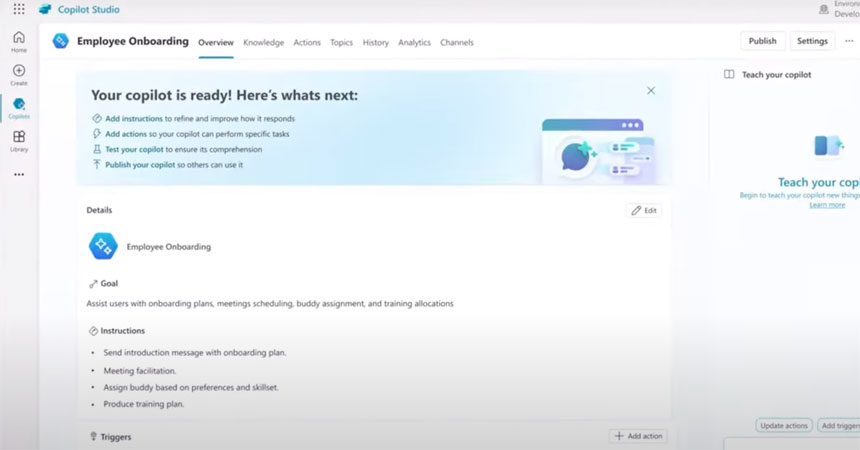

Developers can give their copilot a defined task and equip it with the knowledge and actions it needs to run business processes and their associated tasks.

Microsoft has launched its Power Platform to orchestrate AI-driven business processes and automate tasks.

The new capabilities let users delegate authority to copilots, which can then automate long-running business processes, reason over actions and user input, draw on memory, learn from user feedback, record exception requests, and ask for help when they encounter unfamiliar situations. Copilots can also recall past conversations to add relevant context, within tight guardrails.

Here’s how Microsoft describes a potential Copilot for employee onboarding: “Imagine you’re a new hire. A proactive copilot greets you, reasoning over HR data answers your questions, introduces you to your buddy, gives you the training and deadlines, helps you with the forms, and sets up your first week of meetings. Now, HR and the employees can work on their regular tasks without the hassle of administration.”

“We think with Copilot and Copilot Studio, some tasks will be automated completely,” said Microsoft.

One featured example of a company using Microsoft's Power Platform is the Canadian media company Cineplex.
