IBL News | New York

NVIDIA released eight free AI courses this month. Five are hosted at NVIDIA’s Deep Learning Institute (DLI) platform, two on Coursera, and one on YouTube.

1. Generative AI Explained

2. Building A Brain in 10 Minutes

3. Augment your LLM with Retrieval Augmented Generation

4. AI in the Data Center

5. Accelerate Data Science Workflows with Zero Code Changes

6. Mastering Recommender Systems

7. Networking Introduction

8. Building RAG Agents with LLMs

1. Generative AI Explained

Learn how to:

• Define Generative AI and explain how Generative AI works

• Describe various Generative AI applications

• Explain the challenges and opportunities in Generative AI

https://t.co/JTNyqVZY5v (via Roni Rahman, @heyronir, March 24, 2024)

2. Getting Started with AI on Jetson Nano

Learn how to:

• Set up your Jetson Nano and camera

• Collect image data for classification models

• Annotate image data for regression models

• Train a neural network on your data to create your models

https://t.co/LHFbgLIPNQ

3. Building A Brain in 10 Minutes

You’ll learn:

• How neural networks use data to learn

• The math behind a neuron

https://t.co/E9VEKRKWX1
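As a rough illustration of the "math behind a neuron" that this course mentions, a single artificial neuron can be sketched as a weighted sum of its inputs plus a bias, passed through an activation function. The snippet is not taken from the course; the function names, the choice of a sigmoid activation, and the sample values are assumptions made for illustration only.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Hypothetical single neuron: weighted sum of inputs plus bias, then sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


if __name__ == "__main__":
    # Illustrative values: 1.0 * 0.5 + 2.0 * (-0.25) + 0.0 = 0.0
    print(neuron([1.0, 2.0], [0.5, -0.25], 0.0))
```

Training a network amounts to adjusting the weights and bias so that outputs like this one move closer to the desired values.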

4. Building Video AI Applications on Jetson Nano

Learn to:

• Create DeepStream pipelines for video processing

• Handle multiple video streams

• Use alternate inference engines like YOLO

https://t.co/RuBQDoXMOU

5. Augment your LLM Using RAG

• Understand the basics of RAG

• Learn about the RAG retrieval process

• Discover NVIDIA AI Foundations and the key components of a RAG model

https://t.co/cG0AbeyxS5

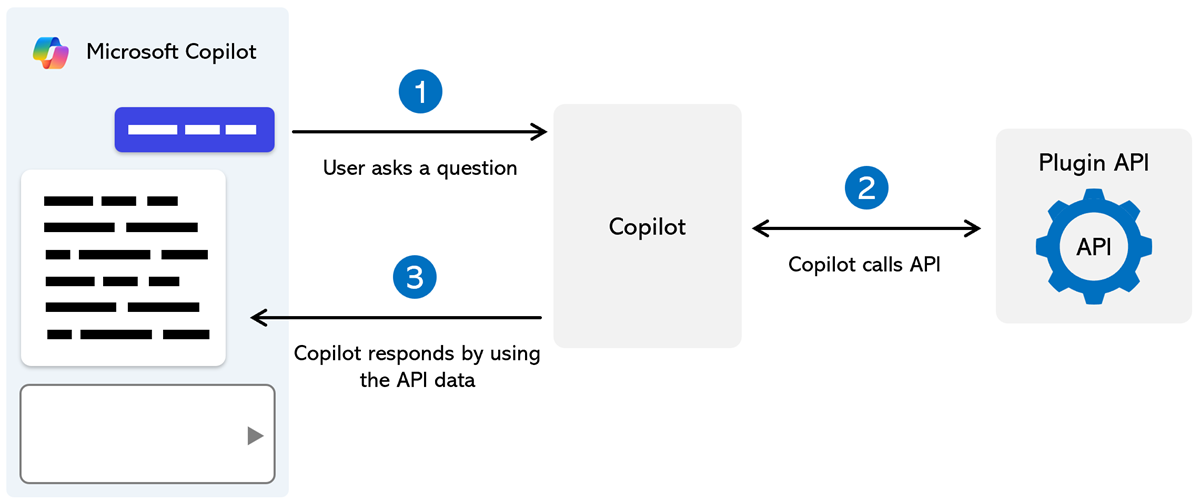

6. Building RAG Agents with LLMs

Learning Objectives:

• Explore scalable deployment strategies

• Learn about microservices and development

• Experiment with LangChain paradigms for dialog management

• Practice with state-of-the-art models

https://t.co/eIqjaBgIU2

7. Accelerate Data Science Workflows with Zero Code Changes

In this course you will:

• Learn the benefits of unified CPU and GPU workflows

• GPU-accelerate data processing and machine learning

• See faster processing times with GPU

https://t.co/UJ9RJkJTWh

8. Introduction to AI in the Data Center

Learn about AI, machine learning, deep learning, GPU architecture, deep learning frameworks, and deploying AI workloads.

Understand requirements for multi-system AI clusters and infrastructure planning.

https://t.co/u96QGB6rVG

[Disclosure: IBL works for NVIDIA by powering its learning platform]