IBL News | New York

OpenAI raised questions about the safety of AI agents operating on the open web, including those in its own ChatGPT Atlas browser.

The San Francisco-based lab admitted that prompt injection, a type of cyberattack that manipulates AI agents into following malicious instructions often hidden in web pages or emails, is a risk that is unlikely to go away.

“Prompt injection, much like scams and social engineering on the web, is unlikely ever to be fully ‘solved,’” OpenAI wrote in a Monday blog post, conceding that “agent mode” in ChatGPT Atlas “expands the security threat surface.”

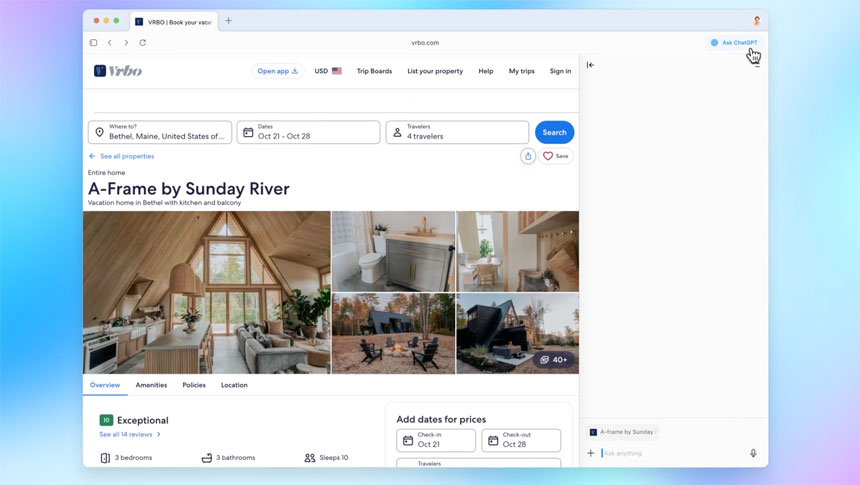

OpenAI launched its ChatGPT Atlas browser in October. Soon after, security researchers demonstrated that a few words written in a Google Doc could change the browser's behavior.

Also in October, Brave published a blog post explaining that indirect prompt injection is a systematic challenge for AI-powered browsers, including Perplexity’s Comet.

The U.K.’s National Cyber Security Centre warned earlier this month that prompt injection attacks against generative AI applications “may never be totally mitigated,” putting websites at risk of data breaches.

In a demo, OpenAI showed how its automated attacker slipped a malicious email into a user's inbox. When the agent later processed the inbox, it followed the email's hidden instructions and sent a resignation message instead of drafting an out-of-office reply.