IBL News | New York

Databricks yesterday released Dolly 2.0, an improved version of its open-source large language model (LLM). The model has 12 billion parameters and is free to use commercially.

Based on the EleutherAI Pythia model family, Dolly 2.0 has been “fine-tuned exclusively on a new, high-quality human-generated instruction following dataset,” crowdsourced among Databricks' 5,000 employees during March and April of 2023, according to the company.

“We are open-sourcing the entirety of Dolly 2.0, including the training code, the dataset, and the model weights, all suitable for commercial use. This means that any organization can create, own, and customize powerful LLMs that can talk to people, without paying for API access or sharing data with third parties.”

Under the licensing terms of the accompanying dataset, databricks-dolly-15k (Creative Commons Attribution-ShareAlike 3.0 Unported License), anyone can use, modify, or extend the dataset for any purpose, including commercial applications.

databricks-dolly-15k on GitHub contains 15,000 high-quality human-generated prompt / response pairs specifically designed for instruction tuning large language models.
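As a rough illustration of what "prompt / response pairs for instruction tuning" can mean in practice, the sketch below turns one record into a single training string. The record itself, the field names (`instruction`, `context`, `response`), and the `### Instruction:` template are assumptions made for this example. They are not confirmed by the announcement and are not necessarily the format Databricks used to train Dolly 2.0.

```python
import json

# Hypothetical record, written as one JSON line.
# The field names are assumed for illustration only.
SAMPLE_LINE = json.dumps({
    "instruction": "Name three primary colors.",
    "context": "",
    "response": "Red, yellow, and blue.",
})


def format_example(record: dict) -> str:
    """Join one prompt/response pair into a single training string."""
    parts = ["### Instruction:\n" + record["instruction"].strip()]
    # Include the optional context block only when it is non-empty.
    context = record.get("context", "").strip()
    if context:
        parts.append("### Context:\n" + context)
    parts.append("### Response:\n" + record["response"].strip())
    return "\n\n".join(parts)


record = json.loads(SAMPLE_LINE)
text = format_example(record)
print(text)
```

In a fine-tuning run, strings like this would typically be tokenized and fed to the model so that it learns to produce the response section when given the instruction.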

The first version of Dolly was trained on a dataset that the Stanford Alpaca team built using the OpenAI API. Because that dataset contained output from ChatGPT, it could not be used commercially: OpenAI's terms of service bar using such output to build models that compete with OpenAI.

“As far as we know, all the existing well-known instruction-following models (Alpaca, Koala, GPT4All, Vicuna) suffer from this limitation, prohibiting commercial use. To get around this conundrum, we started looking for ways to create a new dataset not ‘tainted’ for commercial use.”

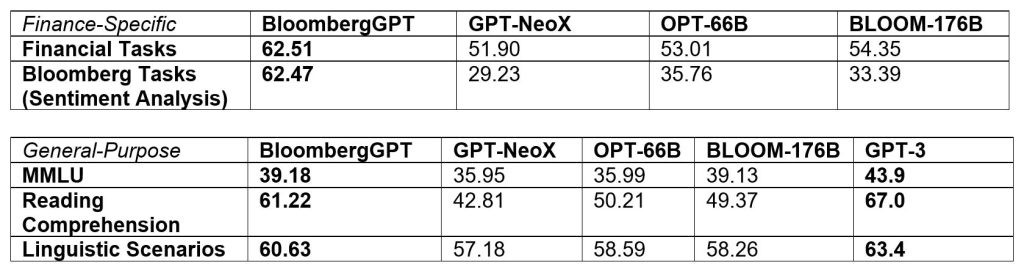

Databricks said that it doesn’t expect Dolly “to be state-of-the-art in terms of effectiveness.”

“However, we do expect Dolly and the open source dataset will act as the seed for a multitude of follow-on works, which may serve to bootstrap even more powerful language models.”

• Download the Dolly 2.0 model weights from the Databricks Hugging Face page

• Dolly repo on databricks-labs, with the databricks-dolly-15k dataset