IBL News | New York

NVIDIA presented Isaac GR00T N1, an open-source foundation model for humanoid robots, at its annual GTC conference yesterday.

The company said it was “the world’s first open, fully customizable foundation model for generalized humanoid reasoning and skills.”

“The age of generalist robotics is here,” said Jensen Huang, founder and CEO of NVIDIA. “With Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers everywhere will open the next frontier in the age of AI.”

The company also announced Newton, an open-source physics engine purpose-built for developing robots, which is under development with Google DeepMind and Disney Research.

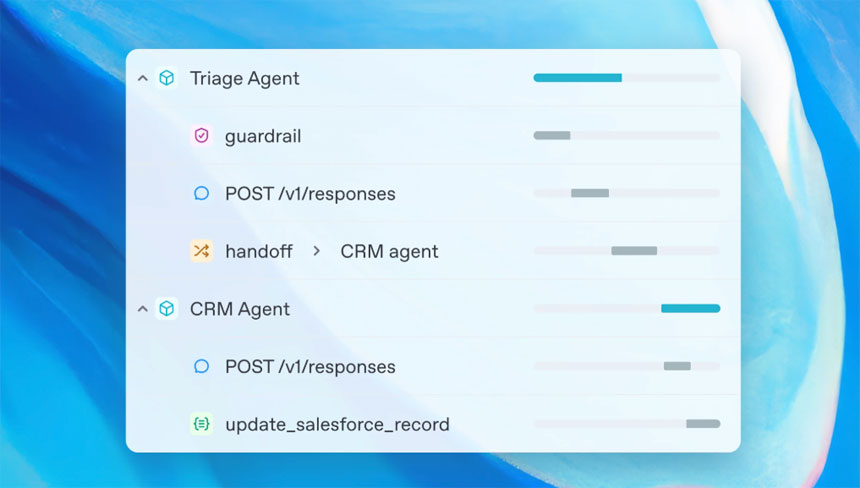

The GR00T N1 foundation model features a dual-system architecture inspired by human cognition. System 1 is a fast-thinking action model that mirrors human reflexes or intuition, while System 2 is a slow-thinking model for deliberate, methodical decision-making.

The chip company said GR00T N1 can generalize across common tasks, such as grasping, moving objects with one or both arms, and transferring items from one arm to another, and can perform multistep tasks requiring extended context and combinations of general skills. These capabilities can be applied to use cases such as material handling, packaging, and inspection.

Developers and researchers can post-train GR00T N1 with real or synthetic data for their specific humanoid robot or task.

In his GTC keynote, Huang demonstrated 1X’s humanoid robot autonomously performing domestic tidying tasks using a post-trained policy built on GR00T N1. The robot’s autonomous capabilities are the result of an AI training collaboration between 1X and NVIDIA.

Other leading humanoid developers with early access to GR00T N1 include Agility Robotics, Boston Dynamics, Mentee Robotics, and NEURA Robotics.

NVIDIA announced GR00T N1, the first fully customizable open-source humanoid robot foundation model, designed to advance general-purpose robotics.

⦿ A dual-system AI inspired by human cognition: System-1 handles fast, intuitive actions, while System-2 enables methodical… pic.twitter.com/qb3gVlMv1o

— The Humanoid Hub (@TheHumanoidHub) March 18, 2025