IBL News | New York

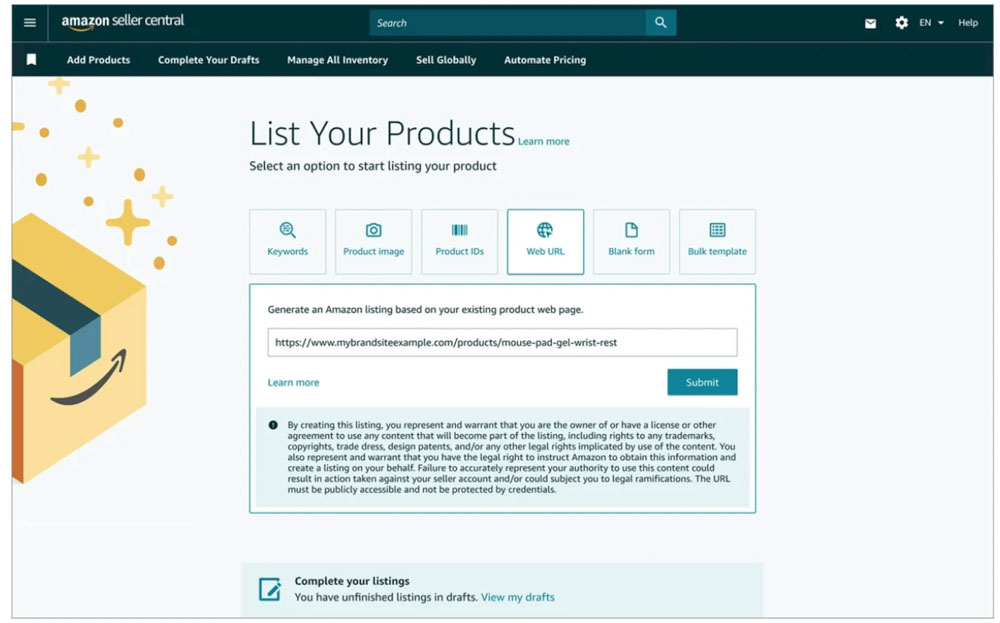

Amazon will release an AI feature that lets sellers generate high-quality product detail pages simply by providing a URL, producing a written description and images tailored to Amazon's store.

The goal is to reduce the time it takes sellers to bring a product from another website onto Amazon, said Mary Beth Westmoreland, Amazon’s VP of worldwide selling partner experience, in a blog post.

Previously, creating product pages required significant effort from sellers, who had to write and enter accurate, comprehensive product descriptions that attract customers.

Sellers can also simply upload an image of their product and use generative AI to automatically create the product title, description, and additional product attributes.

These features can also suggest attributes such as color, as well as keywords that help index the product effectively in customer search.

Amazon said that sellers are submitting the majority of AI-generated listings, accepting the suggested attributes nearly 80% of the time with minimal edits.

“We’ve compared the AI-generated content to non-AI generated content and found improvements across measures of clarity, accuracy, and detail, which can increase a product’s discoverability when customers search in Amazon’s store,” said the company.

The feature is rolling out now and will be available to US sellers in the coming weeks.

Amazon has released numerous AI tools over the past few months. For sellers, it introduced tools that generate product photos and write product listing text.

For shoppers, Amazon unveiled Rufus, an AI chatbot designed to answer buyers’ questions about items, suggest similar products and compare models.

.