IBL News | New York

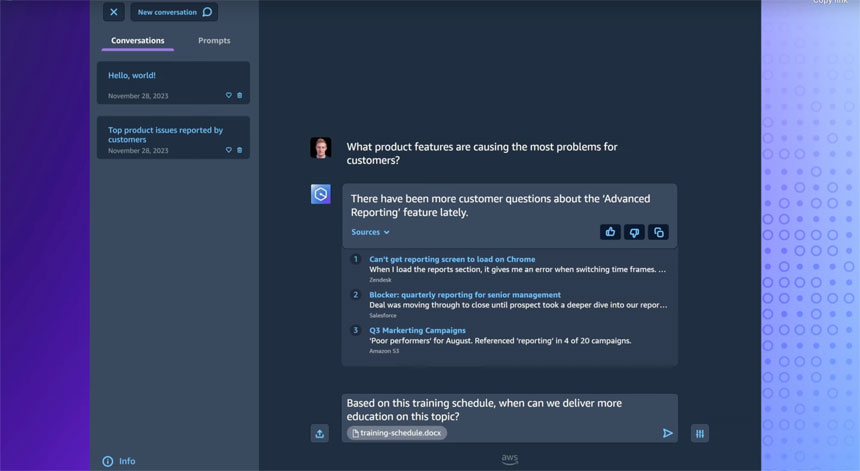

Quora, the company behind the Poe.com chatbot, said it intends to create a new market allowing bot creators in areas such as tutoring, knowledge, therapy, entertainment, analysis, storytelling, roleplay, image, video, and music to generate revenue.

In line with this goal, Poe.com this month launched a creator monetization program that supports both prompt bots and server bots, the latter built by developers who write code and integrate with the platform's API.

“For anyone training their own models and running their own inference, or using a third-party AI service through an API, operating a bot can entail significant infrastructure costs, and we want them to be able to operate sustainably and profitably,” said Adam D’Angelo, CEO of Quora.

Poe.com’s monetization structure, currently available only in the US, has two components:

- If a bot leads a user to subscribe to Poe (measured in a few ways), the company will share a cut of that user's subscription revenue with the bot's creator.

- The bot creator can set a per-message fee, and Poe will pay that fee on every message.

Users can get started at poe.com/creators, or learn about creating a bot at developer.poe.com.

Today we are launching creator monetization for Poe! This program lets any bot creator on Poe generate revenue. This is a major step forward for the platform and is the first program of its kind, so we are very excited to see what it lets everyone create. (thread) pic.twitter.com/feLjB62hYo

— Adam D’Angelo (@adamdangelo) October 25, 2023

🚀 “Introducing Creator Monetization for Poe” on Quora Blog 🎉

Quora has unveiled a new feature allowing bot creators on Poe to generate revenue. This significant advancement supports both prompt bots made directly on Poe and server bots developed by coders integrated with the… pic.twitter.com/IgcoDc0rig

— AI Daily Guy (@AIDailyGuy) October 26, 2023