IBL News | New York

The finance and HR management company Workday (NASDAQ: WDAY) announced this week, during its annual customer conference in San Francisco, that it will embed in its platform a suite of new generative AI features aimed at increasing productivity, retaining talent, and streamlining business processes.

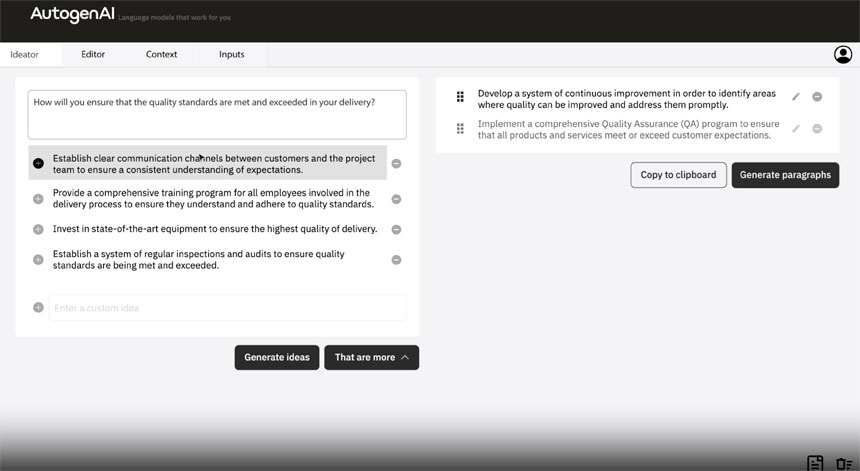

The Workday generative AI capabilities will include:

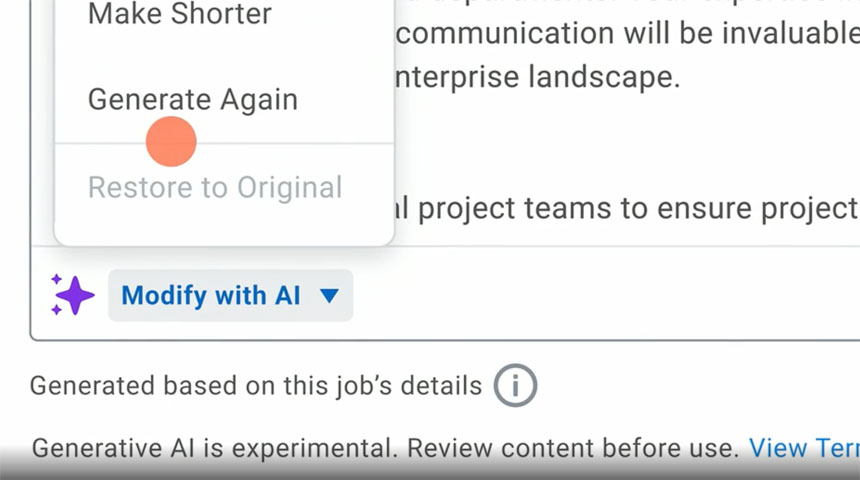

• Generating job descriptions for hiring managers and recruiters.

• Analyzing and correcting contracts for sales and finance teams, comparing them against signed contracts.

• Creating personalized knowledge management articles to keep employees informed about company policies and updates, improving the tone or length of those articles, and translating them into different languages.

• Automating the process of crafting past-due notices with recommendations to recapture missing funds sooner.

• Turning text into code to build custom apps faster. Its Developer Copilot tool, similar to code-generating services like GitHub Copilot and Amazon CodeWhisperer, will be natively embedded into Workday’s App Builder.

• Creating employees’ work reports and retention plans, with a summary of their strengths and areas for growth. Data will be pulled from performance reviews, employee feedback, contribution goals, and skills.

• Generating statements of work (SoW) for service procurement.

• Providing users with conversational AI for summarization, search, maintaining context, and similar tasks.

These new generative AI features for job descriptions, contract analysis, knowledge management, collections letters, app development, employee growth plans, and statements of work are expected to begin rolling out within the next six to twelve months, according to the company.

“Generative AI has the potential to completely transform work as we know it,” said Sayan Chakraborty, Co-President at Workday.

A TechCrunch analysis identified many potential problems with Workday’s approach, especially regarding the employee performance reports.