AI influencers compete for likes and lucrative brand deals on social media.

Source: YouTube

Elon Musk tried to block Sam Altman’s big AI deal in the UAE.

Source: YouTube

Professors turn to old-fashioned methods to beat AI-generated work.

Source: YouTube

AI didn’t just learn to think, it learned to save lives.

Source: YouTube

In her thought-provoking talk, Beata explores how artificial intelligence is transforming the creative world—raising questions of ownership, emotion, and authenticity.

Source: YouTube

AI could wipe out half of all entry-level white-collar jobs, CEO warns.

Source: YouTube

IBL News | New York

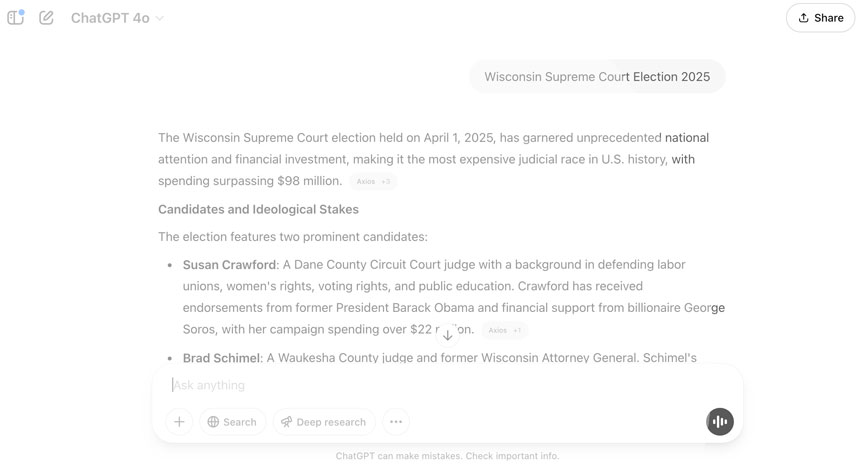

Which chatbot ranks second in the world by traffic?

Competition among chatbot makers is fierce, but every rival pales next to ChatGPT, which surged to 500 million weekly active users in late March.

Google’s Gemini web traffic increased to 10.9 million average daily visits in March, up 7.4% month-over-month.

At the same time, the number of users of Microsoft’s OpenAI-powered Copilot increased to 2.4 million, up 2.1% from February.

In March, Anthropic’s Claude reached an average of 3.3 million daily visits, xAI’s Grok averaged 16.5 million, and China’s DeepSeek received 16.5 million visits that month.

On the app side, the market intelligence firm Sensor Tower highlighted the Claude app, which saw a 21% week-over-week increase in weekly active users during the week of February 24, when Anthropic released its latest model, Claude 3.7 Sonnet.

Also, Google’s Gemini 2.0 Flash saw its app’s weekly active users grow by 42%. Google brought a “canvas” feature to Gemini that lets users preview the output of coding projects.

Elon Musk shares his insights on artificial intelligence.

Source: YouTube

How does the Wake County Public School System plan to take on AI?

Source: YouTube

What to know about AI assistants and how they’re changing personal productivity.

Source: YouTube