IBL News | New York

Harvard University unveiled a study, conducted in the fall of 2023, showing that student engagement doubled when students used an AI tutor tailored to a physics course.

The tool helped students learn more material, and students also self-reported significantly more engagement and motivation to learn.

These preliminary findings have inspired other large Harvard classes to test the approach, The Harvard Gazette wrote. Specifically, the Derek Bok Center for Teaching and Learning is collaborating with Harvard University Information Technology to pilot similar AI chatbots in a handful of large introductory courses this fall.

Harvard University is also developing resources to enable any instructor to integrate tutor bots into their courses.

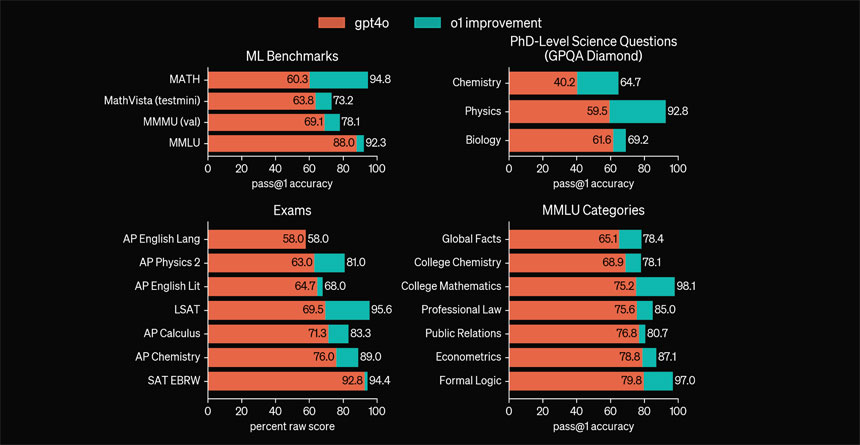

Surprisingly, this custom-designed AI chatbot proved more effective than a typical “active learning” classroom setting, in which students learn as a group from a human instructor.

The study was led by lecturer Gregory Kestin and senior lecturer Kelly Miller. They analyzed the learning outcomes of 194 students enrolled last fall in Kestin’s Physical Sciences 2 (PS2), a physics course for life sciences majors.

“We went into the study extremely curious about whether our AI tutor could be as effective as in-person instructors,” Kestin, who also serves as associate director of science education, said. “And I certainly didn’t expect students to find the AI-powered lesson more engaging.”

“It was shocking and super exciting,” Miller said, noting that PS2 is already “very, very well taught.”

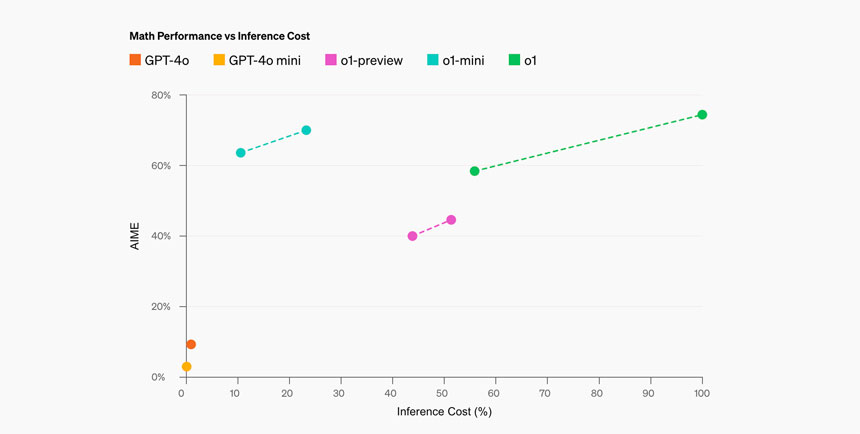

The researchers wrote in their paper that the experiment shows the advantage of using AI tutoring as students’ first substantial introduction to challenging material: “If AI can be used to effectively teach introductory material to students outside of class, this would allow ‘precious class time’ to be spent developing higher-order skills, such as advanced problem-solving, project-based learning, and group work.”

However, Kestin and Miller warned about potential misuses:

“AI tutors shouldn’t ‘think’ for students but help them build critical thinking skills. AI tutors shouldn’t replace in-person instruction, but help all students better prepare for it — and possibly in a more engaging way than ever before.”

“Students with a very strong background in the material may be less engaged, and they’re sometimes bored,” Miller said. “Students who don’t have the background sometimes struggle to keep up. So the fact that this AI tutor can support that difference is probably the biggest thing.”

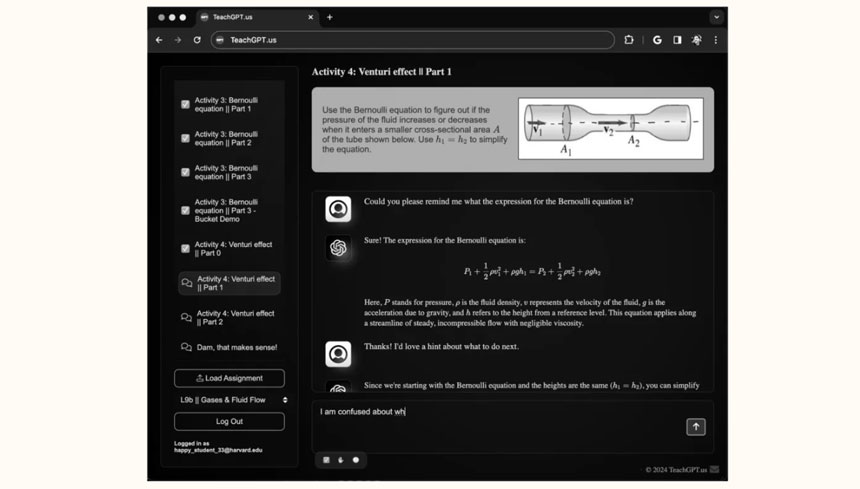

The website that hosts the tutor was built on ChatGPT. Rather than relying on ChatGPT’s default behavior, however, the custom tutor guided users with carefully refined, research-based prompt engineering and “scaffolding” to ensure the lessons were accurate and well-structured.

Mathematics instructor Eva Politou will introduce a version of this AI tutor in Math 21a (Multivariable Calculus) this fall. Every week, students will generate questions about a specific topic and search for answers with the AI tutor as a guide.

“The primary goal of the AI tutor is to promote an inquiry-based studying method,” Politou explained. “We want students to practice generating questions, critically approaching real-life scenarios, and becoming active agents of their own understanding and learning.”