IBL News | New York

This month, Harvard University announced the release of a high-quality dataset of one million public-domain books.

The dataset includes books that were scanned as part of the Google Books project and are no longer protected by copyright. It spans genres, decades, and languages, with classics by Shakespeare, Charles Dickens, and Dante.

It was created by Harvard’s newly formed Institutional Data Initiative with funding from both Microsoft and OpenAI.

Anyone can use the dataset to train LLMs and other AI tools.

In addition to the trove of books, the Institutional Data Initiative is working with the Boston Public Library to scan millions of public-domain articles from a range of newspapers, and it says it is open to forming similar collaborations.

Other new public-domain datasets, like Common Corpus, are also available on the open-source AI platform Hugging Face.

Common Corpus contains an estimated 3 to 4 million books and periodical collections.

It was released this year by the French AI startup Pleias, with backing from the French Ministry of Culture.

Another is Source.Plus, which contains public-domain images from Wikimedia Commons as well as from a variety of museums and archives.

Several major cultural institutions, such as the Metropolitan Museum of Art, have long made their archives publicly accessible as standalone projects.

Ed Newton-Rex, a former Stability AI executive who now runs a nonprofit that certifies ethically trained AI tools, says the rise of these datasets shows there is no need to steal copyrighted material to build high-performing, high-quality AI models.

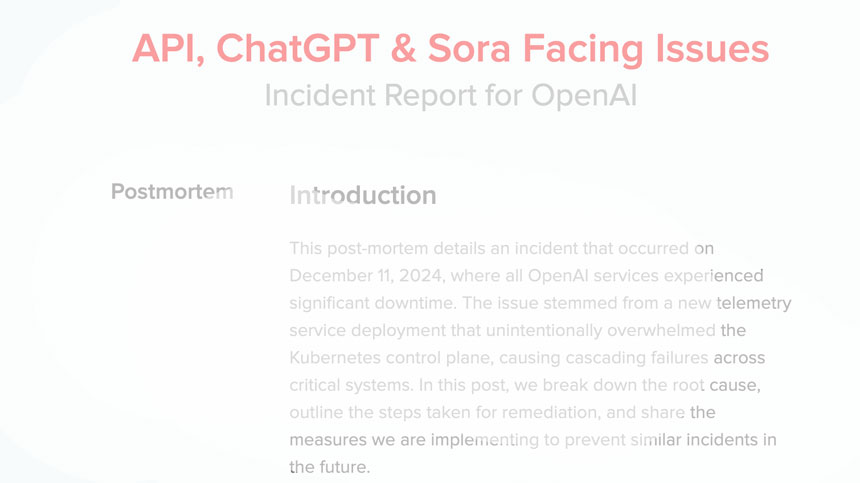

OpenAI previously told lawmakers in the United Kingdom that it would be “impossible” to create products like ChatGPT without using copyrighted works.

“Large public domain datasets like these further demolish the ‘necessity defense’ some AI companies use to justify scraping copyrighted work to train their models,” Newton-Rex told Wired.