IBL News | New York

McKinsey & Company this month unveiled its generative AI chatbot named Lilli, designed to summarize key points and provide relevant content to its partner consultants and clients.

Lilli has been in beta since June 2023 as a “minimum viable product” (MVP), used by 7,000 employees and answering 50,000 questions. It will roll out across McKinsey this fall.

The chat application is named after Lillian Dombrowski, the first woman McKinsey hired for a professional services role, back in 1945.

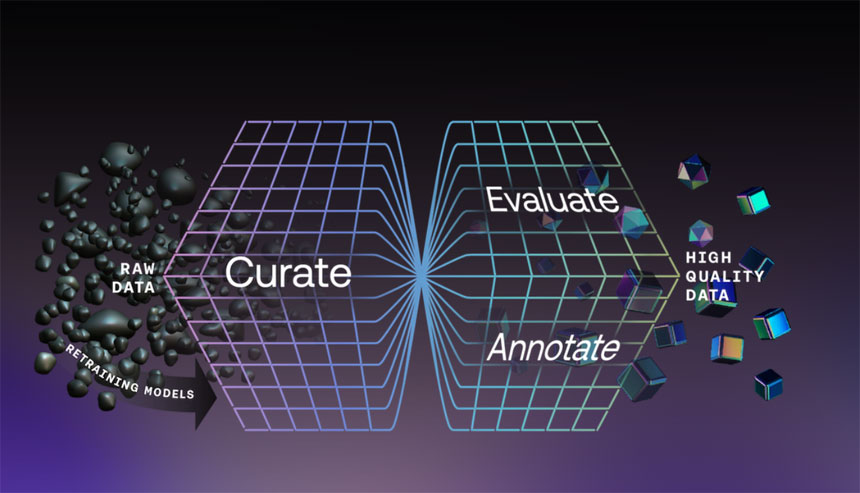

The tool accesses the firm’s extensive knowledge base, with over 100,000 documents, interview transcripts, and resources from 40 curated sources and experts in 70 countries.

“Lilli aggregates our knowledge and capabilities in one place for the first time and will allow us to spend more time with clients activating those insights and recommendations and maximizing the value we can create,” said Erik Roth, a senior partner with McKinsey.

“I use Lilli to look for weaknesses in our argument and anticipate questions that may arise,” said Adi Pradhan, an associate partner at McKinsey. “I also use it to tutor myself on new topics and make connections between different areas on my projects.”

With 30,000 employees, McKinsey & Company is one of the largest consulting agencies in the world.

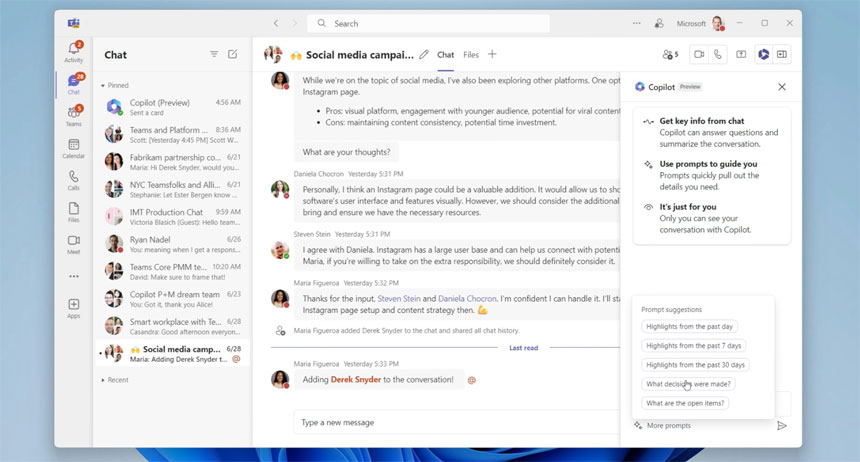

With an interface similar to ChatGPT and Claude 2, Lilli contains an expandable left-hand sidebar with saved categorized prompts, according to a report in VentureBeat.

It includes two tabs that a user may toggle between: “GenAI Chat”, which sources data from a more generalized large language model (LLM) backend, and “Client Capabilities”, which sources responses from McKinsey’s corpus of documents, transcripts, and presentations. Lilli provides full attribution, citing its sources at the bottom of every response along with links and even specific page numbers.

McKinsey’s chatbot leverages models developed by Cohere and OpenAI on the Microsoft Azure platform, although the firm insists that its tool is “LLM agnostic” and is constantly exploring new LLMs.

• Report by McKinsey: The state of AI in 2023: Generative AI’s breakout year

• Paul Tocatilan on LinkedIn: Democratization of Mentors
