IBL News | New York

AI chipmaker and cloud services start-up Cerebras Systems filed its prospectus for an IPO on the Nasdaq last month.

However, the news agency Reuters reported yesterday that the company will likely postpone its IPO after facing delays in a U.S. national security review of UAE-based tech conglomerate G42’s minority investment in the company.

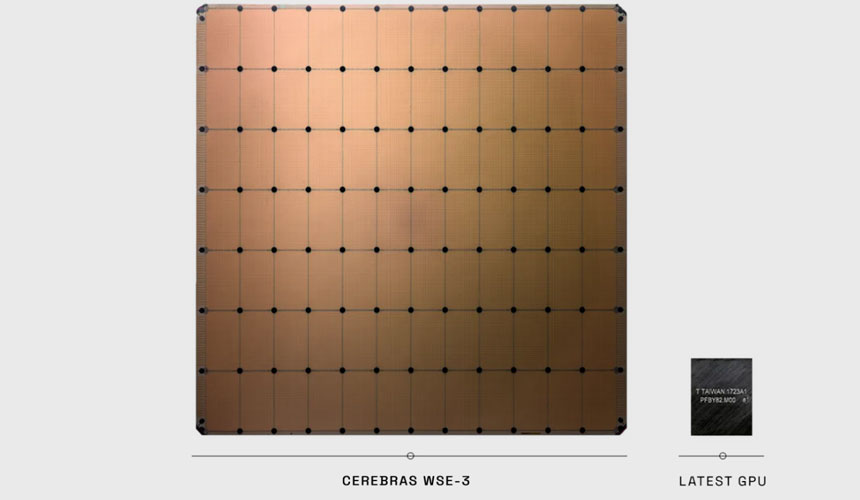

Cerebras competes with industry leader Nvidia in the lucrative artificial intelligence chip market.

The Sunnyvale, California-based company, which plans to trade under the ticker symbol “CBRS,” is seeking to capitalize on investor excitement about AI with a high-profile IPO.

The company said its WSE-3 chip has more cores and memory than Nvidia’s popular H100.

Nvidia’s graphics processing units are the industry’s choice for training and running AI models. But the AI semiconductor market is getting more crowded.

Amazon, Google, and Microsoft are developing AI chips for their cloud services. AMD and Intel are also in this market.

Taiwan Semiconductor Manufacturing Company makes the Cerebras chips. Cerebras warned investors that any possible supply chain disruptions may hurt the company.

According to the filing, Cerebras had a net loss of $66.6 million on $136.4 million in sales in the first six months of 2024.

The company reported a net loss of $127.2 million on revenue of $78.7 million for the full year of 2023.

Last year, 83% of Cerebras’ revenue came from G42, a UAE-based AI firm that counts Microsoft as an investor.

According to the filing, in May, G42 committed to purchasing $1.43 billion in orders from Cerebras before March 2025. G42 currently owns under 5% of Cerebras’ Class A shares, and the firm can purchase more depending on how much Cerebras product it buys.

The largest investor in Cerebras is venture firm Foundation Capital, followed by Benchmark and Eclipse Ventures. According to the filing, Alpha Wave, Coatue, and Altimeter each own at least 5%.

Other investors include OpenAI CEO Sam Altman and Sun Microsystems co-founder Andy Bechtolsheim. The only individual who owns 5% or more is Andrew Feldman, the startup’s co-founder and CEO.

Just 82 companies went public in the U.S. in the first half of the year, a slight uptick from last year.

Even though conditions for going public are good, with interest rates falling and tech stocks trading up, many companies are pushing IPO plans to 2025, hoping to avoid any market volatility caused by the presidential election. “It’s almost like a wait-and-see environment,” said one analyst.

[Disclosure: ibl.ai, the parent company of iblnews.org, has NVIDIA as a client]