Artificial intelligence is showing up everywhere — even in the fields. And the University of Illinois is ahead of the pack when it comes to applying AI technology to the farm.

Source: YouTube

IBL News | New York

AI-powered dolls are helping seniors in South Korea combat loneliness.

These companion robots are produced by a local startup called Hyodol, named after the Confucian value of caring for elders.

The cherished robots, priced at the equivalent of $1,150 each, strike up conversations using ChatGPT.

They remind elders to take their medication or eat a meal. They can also alert social workers and families during emergencies or encourage seniors by saying, “Grandma, I miss you even when you’re by my side.”

If an infrared sensor on the doll's neck detects no movement for 24 hours, it alerts the team. A microphone in its chest records the user's answers to daily questions, such as "How are you feeling today?" and "Are you in pain?"

South Korea, a rapidly aging nation, is home to many profoundly lonely older adults, many of whom suffer from depression, dementia, and chronic illnesses. Its suicide rates are among the highest in the developed world.

Experts say that what many seniors fear most is not death but loneliness.

More than 12,000 Hyodol robots are currently distributed across the country.

Korea’s challenges are mirrored in other developed nations.

• In Japan, Paro, a pet robot, provides companionship to older adults.

• In apartments across New York City, ElliQ, an AI robot resembling a Pixar lamp, discusses the meaning of life.

• In Singapore, humanoid robot Dexie leads bingo sessions at senior care facilities.

Hyodol is aiming for a U.S. debut in 2026.

The eldercare robot market is projected to hit $7.7 billion by 2030, according to Research and Markets, a market research firm.

One of the most common forms of breast cancer is nearly impossible to detect.

Source: YouTube

Artificial intelligence is rapidly changing how knowledge is created, shared, and debated across higher education.

Source: YouTube

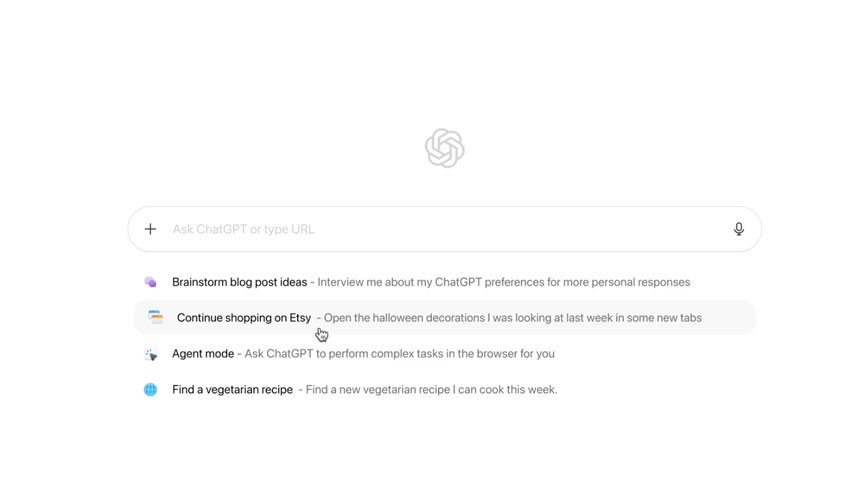

Artificial intelligence company OpenAI is diving into the web browser space with a direct challenge to Google Chrome called ChatGPT Atlas.

Source: YouTube

In the last few years, video and other content created with artificial intelligence have begun to flood almost every part of the internet. It has appeared everywhere from Spotify to the Kindle Store. But on social media, it is almost unavoidable.

Source: YouTube

Amazon is taking steps to address how artificial intelligence and automation are changing its workforce.

Source: YouTube

IBL News | New York

OpenAI yesterday introduced a new free web browser for macOS built with ChatGPT at its core.

The browser, named ChatGPT Atlas, follows the company's launch of search in ChatGPT last year, which is now one of ChatGPT's most-used features.

Atlas allows the user to complete tasks in the browser without copying and pasting or leaving the page. Windows, iOS, and Android versions "are coming soon."

ChatGPT Atlas understands what the user is looking at and stores this information in its memory, allowing conversations to draw on past chats.

It also remembers history and context from the sites visited. This means, for example, that the user can ask: "Find all the job postings I was looking at last week and create a summary of industry trends so I can prepare for interviews."

In agent mode, Atlas can also act on the user's behalf while they browse: automating tasks, researching and analyzing information, and planning events or booking appointments. This feature is available in preview for Plus, Pro, and Business users.

The ChatGPT agent's capabilities are built natively into Atlas.

OpenAI warns that agents carry risks: attackers could hide malicious instructions in a website or email designed to override the ChatGPT agent's intended behavior. "This could lead to stealing data from sites you're logged into or taking actions you didn't intend."

The company added: "As outlined in the ChatGPT agent system card, we've run thousands of hours of focused red-teaming and have placed a particular emphasis on safeguarding ChatGPT from such attacks, including designing our safeguards so they can be quickly adapted to novel attacks."

Still, OpenAI acknowledged: "Our safeguards will not stop every attack that emerges as AI agents grow in popularity."

AI safety is creating a growing regulatory rift between Anthropic, the White House, and others.

Source: YouTube